I must confess to getting a little sick of seeing the endless stream of articles about this (along with the season finale of Succession and the debt ceiling), but what do you folks think? Is this something we should all be worrying about, or is it overblown?

EDIT: have a look at this: https://beehaw.org/post/422907

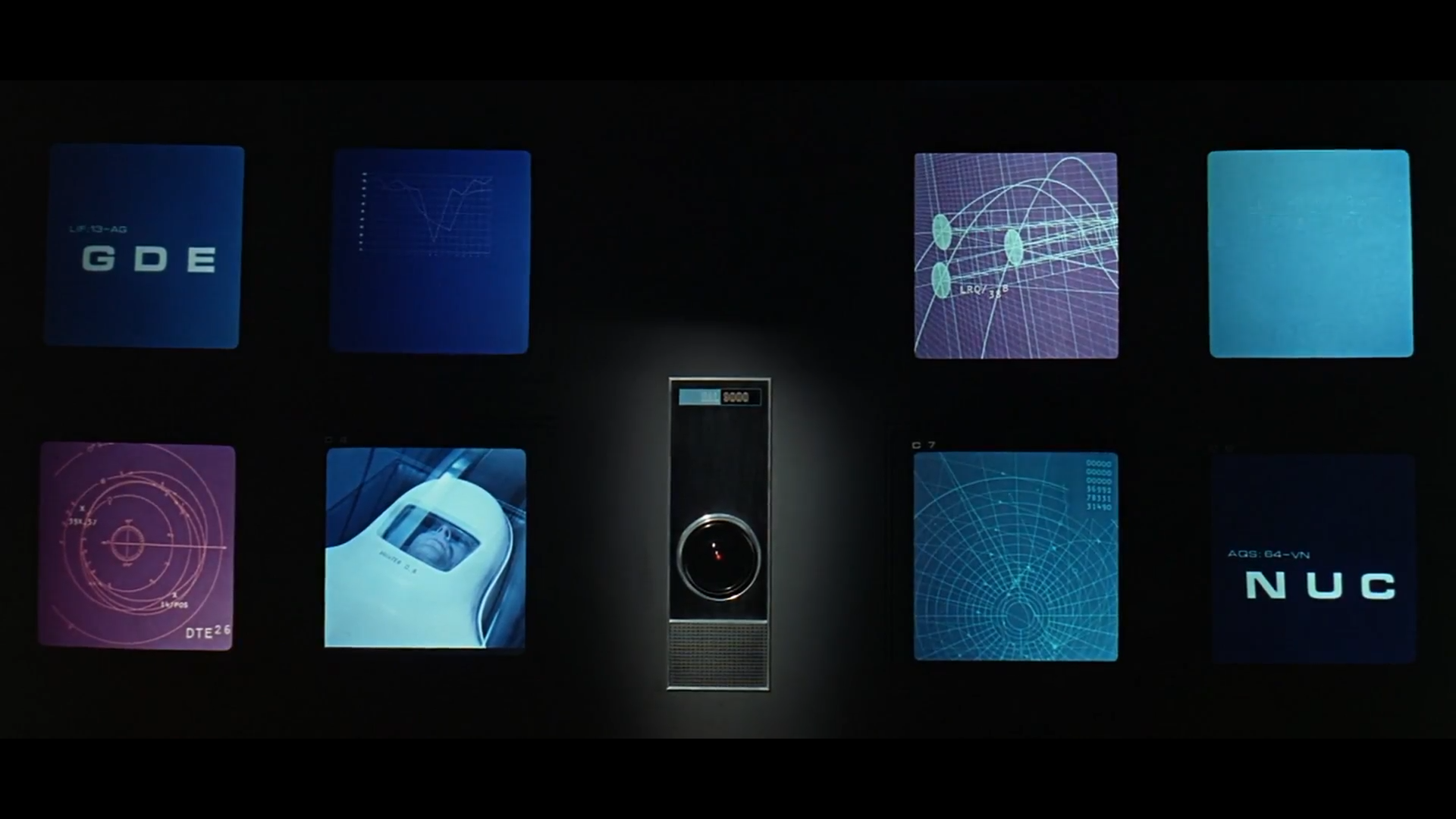

This video by supermarket-brand Thor should give you a much more grounded perspective. No, the chances of any of the LLMs turning into Skynet are astronomically low, probably zero. The AIs are not gonna lead us into a robot apocalypse. So, what are the REAL dangers here?

- As is already happening, greedy CEOs are salivating at the idea of replacing human workers with chatbots. For many workers this might actually stick, and leave them unemployable.

- LLMs by their very nature will “hallucinate” when asked for stuff, because they only have a model of language, not of the world. As a result, as an expert said, “What the large language models are good at is saying what an answer should sound like, which is different from what an answer should be”. This is a fundamental limitation of all these models; it’s not something that can be patched out of them. So, bots will spew extremely convincing bullshit and cause lots of damage as a result.

- Nvidia recently reported huge earnings thanks to all this AI training (as it’s heavily dependent on strong GPUs), and its market valuation hit a trillion dollars or something like that. This has created a huge gold rush. In the USA in particular, it’s anticipated that this will kill any sort of regulation that might slow down the money. The EU might not go that route, and Japan recently went all in on AI, declaring that training AIs doesn’t break copyright. So, we’re gonna see an arms race that will move billions of coin.

- Both the art and text AIs will get to the point where they can replace entry-level people. They’re no danger to proper experts and artists, but students and learners will be affected. This will kill entry-level jobs. How will the upcoming generations get the experience to actually become trained? “Not my problem,” the AI companies and their customers will say. I hope this ends up being the catalyst for a serious move towards UBI, but who knows.

So no, we’re not gonna see Endos crushing skulls, but if measures aren’t taken we’re gonna see inequality get way, WAAAY worse very quickly all around the world.

A lot of the fearmongering surrounding AI, especially LLMs like GPT, is very poorly directed.

People with a vested interest in LLMs (i.e. shareholders) play into the idea that we’re months away from the AI singularity, because it generates hype for their technology.

In my opinion, a much more real and immediate risk of the widespread use of ChatGPT, for example, is that people believe what it says. ChatGPT and other LLMs are bullshitters - they give you an answer that sounds correct, without ever checking whether it is correct.